Knock knock – it’s facial recognition for events! You already know that the Event Tech Podcast is all about the latest and greatest technologies. And of course, the ones you can use to make your event go from good to outstanding! For the last few weeks, we’ve explored everything from interactive VR experiences to machine learning and events. This week, we bring you another fantastic trend that’s been creating its fair share of buzz.

Today’s episode is all about facial recognition for events. We’ve covered it before, but today we dive in deeper than ever. Joining our hosts Will Curran and Brandt Krueger is Panos Moutafis, CEO and co-founder of Zenus, which provides facial recognition for events. The three talk about everything you’ve ever wanted to know about the topic, plus a little something extra. Get ready to roll, it’s Event Tech Podcast time!

Facial Recognition – Where Is It?

Panos jumps right into the matter at hand: “For example, Facebook has the tagging feature on photos. One of the differences you see when it comes to gaining access to a device, such as your cell phone or your Surface running Windows, is that most of the time these devices require special hardware. The iPhone X has a 3D scanner built into the device, and it only works with the iPhone X. Windows Hello uses a different type of sensor that only works with the Surface”.

Zenus: How Is It Different?

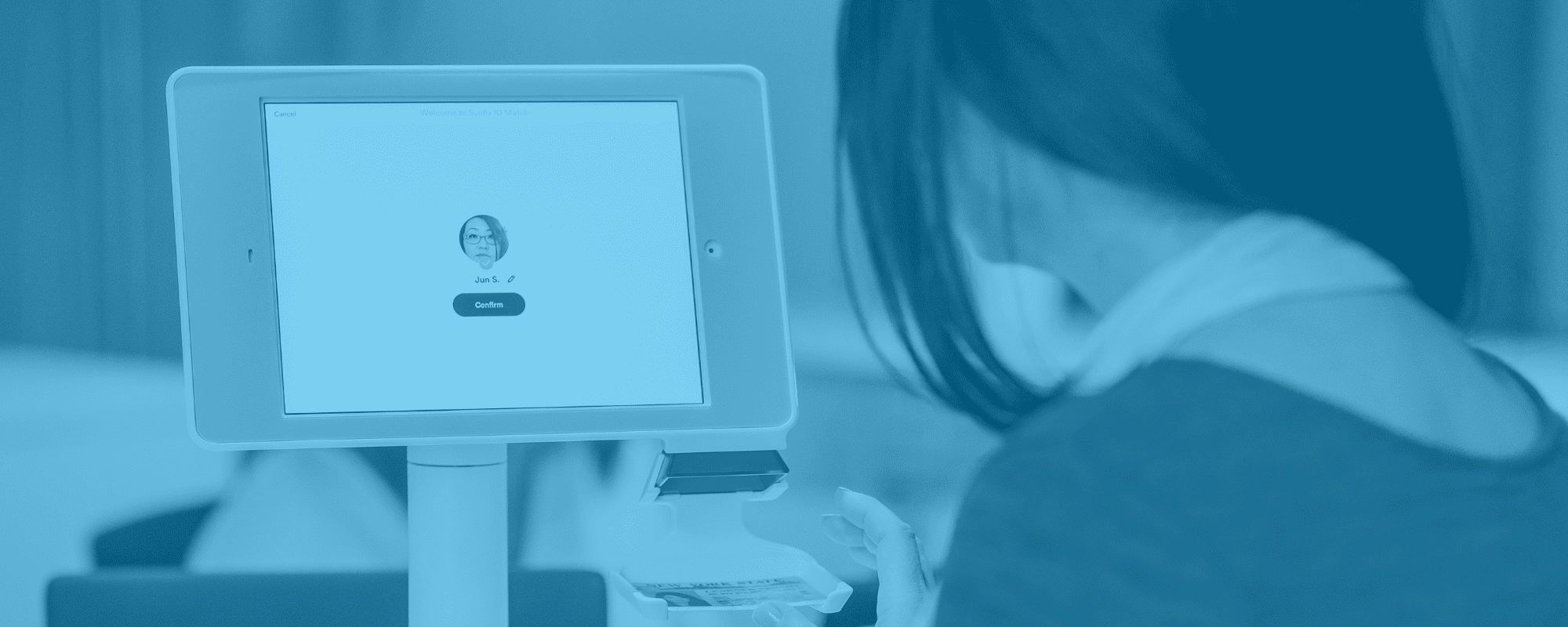

“What we do that’s a little bit different is that our technology works with any device that has a camera: any cell phone, any regular webcam, any regular camera in your devices, tablets, laptops, whatever. We make it work with this equipment”, he explains.

“The second difference, and I will get a little bit technical here, is the difference between what we call one-to-one and one-to-many matching. When I have my phone, I store my face in the phone, and each time somebody tries to gain access, we only search against this one template, this one person. In the events industry it’s very, very different. You have an event with 5,000 people and one picture of each person in the database. Each time someone tries to check in, you have to search the entire database, find the most likely match, and then make a decision”.
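To make the distinction concrete, here is a minimal Python sketch of the two matching modes. It assumes each face has already been reduced to a short numeric face print (the episode later mentions roughly 100 numbers per print; the toy prints here use 4) and scores pairs with cosine similarity against a fixed threshold. The function names, the prints, and the threshold are illustrative assumptions, not Zenus’s actual engine.

```python
from __future__ import annotations

import math

FacePrint = list[float]  # a face reduced to a short vector of numbers


def cosine_similarity(a: FacePrint, b: FacePrint) -> float:
    """Score in [-1, 1]; higher means the two face prints look more alike."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def verify_one_to_one(probe: FacePrint, template: FacePrint, threshold: float) -> bool:
    """Phone-unlock style: compare against the single enrolled template."""
    return cosine_similarity(probe, template) >= threshold


def identify_one_to_many(probe: FacePrint, database: dict[str, FacePrint], threshold: float) -> str | None:
    """Event check-in style: score every attendee, keep the best match,
    and accept it only if it clears the threshold (otherwise: not registered)."""
    best_ticket, best_score = None, -1.0
    for ticket, template in database.items():
        score = cosine_similarity(probe, template)
        if score > best_score:
            best_ticket, best_score = ticket, score
    return best_ticket if best_score >= threshold else None


database = {"T-001": [0.9, 0.1, 0.3, 0.2], "T-002": [0.1, 0.8, 0.2, 0.5]}
probe = [0.88, 0.12, 0.31, 0.18]
print(identify_one_to_many(probe, database, threshold=0.9))  # T-001
```

Note that the one-to-many search still applies the threshold to the winner: picking the best match alone would “recognize” even people who never registered.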

The Accuracy Issue

“The problem there is the following. When you have a very large database of people, sometimes faces will look alike, and that makes the problem much, much harder in terms of accuracy. In the real world you also have all of these variations: someone might have submitted a photo when they were clean-shaven and now they have a beard, or they submitted a photo taken two years ago. With all of these variations and a very large database of faces that might look alike, the problem becomes much more challenging”, adds Panos. So what does this mean? As Brandt puts it, “the whole point is we want to make sure that we have the smoothest experience possible for our attendees”.
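A back-of-the-envelope calculation shows why database size matters. If a single comparison against the wrong person clears the threshold with a small probability p, and we simplify by treating comparisons as independent, then a one-to-many search over N non-matching faces produces at least one false match with probability 1 - (1 - p)^N. The sketch below evaluates that formula; the value of p is made up for illustration and is not a figure from the episode.

```python
def prob_any_false_match(per_comparison_fmr: float, database_size: int) -> float:
    """Probability that a probe falsely clears the threshold against at least one
    of `database_size` non-matching templates, assuming independent comparisons."""
    if not 0.0 <= per_comparison_fmr <= 1.0:
        raise ValueError("per_comparison_fmr must be a probability")
    return 1.0 - (1.0 - per_comparison_fmr) ** database_size


# Illustrative per-comparison false match rate (assumed, not from the episode).
p = 1e-5
for n in (1, 1_000, 5_000, 12_000):
    print(f"{n:>6} faces: {prob_any_false_match(p, n):.4%}")
```

With this assumed p, the risk grows from about 0.001% for a phone-style single template to roughly 5% at 5,000 faces and over 11% at 12,000. Real lookalikes are not independent, but the trend is the one Panos describes: an engine that is nearly flawless one-to-one can produce noticeably more false matches one-to-many.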

Panos says that “one of the tricky things, and this is the answer most people don’t want to hear, is that it depends. It really depends. The size of the database is one factor that impacts accuracy. Another is whether you have one, two, or three images per person. Another is the quality of the pictures themselves: is it a frontal face, is it a profile view, and so forth. You have all of these different factors affecting accuracy, and in most cases it’s very, very hard to predict. And then you have diversity and so forth”.

How Accuracy Changes

“Typically speaking, you have two different error rates: false positives and false negatives. A false positive is when I didn’t give my picture before the event, I walk up, and I’m recognized as somebody from the database. A false negative is when I did give my picture, I go in front of the system, and it doesn’t recognize me. The tricky part is keeping both of these errors very, very low, because there is usually a trade-off: the more you decrease the false positives, the higher the false negatives, and the lower the false negatives, the higher the false positives”, says Panos.

He continues: “It’s like a scale. It goes up and down. Keeping both of them low is usually the tricky part, and this is where having a very strong technology on the back end, a very strong facial recognition engine, and the know-how to implement the solution for the specific application at hand has a big, big impact”.
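The trade-off comes from a single decision threshold on the match score. The Python sketch below computes both error rates at several thresholds; the scores are invented for illustration, and in practice they would come from a labeled test set of registered attendees and unregistered visitors.

```python
def error_rates(genuine_scores: list[float], impostor_scores: list[float], threshold: float) -> tuple[float, float]:
    """Return (false_positive_rate, false_negative_rate) at a given threshold.

    False positive: an unregistered person scores at or above the threshold.
    False negative: a registered attendee scores below the threshold.
    """
    fpr = sum(s >= threshold for s in impostor_scores) / len(impostor_scores)
    fnr = sum(s < threshold for s in genuine_scores) / len(genuine_scores)
    return fpr, fnr


# Invented similarity scores for illustration only.
genuine = [0.95, 0.91, 0.88, 0.82, 0.76]   # registered attendees vs. their own photo
impostor = [0.30, 0.45, 0.62, 0.79, 0.85]  # unregistered people vs. their closest lookalike

for t in (0.7, 0.8, 0.9):
    fpr, fnr = error_rates(genuine, impostor, t)
    print(f"threshold {t}: false positives {fpr:.0%}, false negatives {fnr:.0%}")
```

Raising the threshold from 0.7 to 0.9 drives false positives from 40% to 0% while false negatives climb from 0% to 60%, which is the “scale” Panos describes. A stronger engine helps by pushing the two score distributions further apart, so that some threshold keeps both rates low.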

True VS Perceived Accuracy

“When we first started doing events, we collected pictures from people: if you want to try facial recognition, submit your photo. Initially, some people submitted pictures that were not suitable for face recognition. For example, imagine George registers and provides a picture of himself and his wife. Then the system doesn’t know which person is registering. Or you have cases where the face is very, very small, and it’s not enough to extract the biometric data and signature. Or somebody submits a photo of their dog, or an upside-down image, and so forth”, continues Panos on the issue of accuracy.
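Many of these bad submissions can be caught at registration time rather than at the door. Below is a minimal Python sketch of such a check. It assumes a face detector (not shown, and hypothetical here) has already returned bounding boxes for the uploaded photo; the `FaceBox` format and the 80-pixel minimum face size are illustrative assumptions, not Zenus settings.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class FaceBox:
    """Bounding box of one detected face, in pixels (hypothetical detector output)."""
    x: int
    y: int
    width: int
    height: int


MIN_FACE_PX = 80  # assumed minimum face size for extracting a usable face print


def check_registration_photo(faces: list[FaceBox], min_face_px: int = MIN_FACE_PX) -> str | None:
    """Return a human-readable rejection reason, or None if the photo is usable."""
    if not faces:
        # Photos of pets or scenery, and images many detectors fail on (e.g. upside down).
        return "No face found. Please upload a clear, upright photo of yourself."
    if len(faces) > 1:
        # e.g. George with his wife: the system cannot tell who is registering.
        return "More than one face found. Please upload a photo of just yourself."
    face = faces[0]
    if min(face.width, face.height) < min_face_px:
        return "Your face is too small in this photo. Please upload a closer shot."
    return None
```

Returning the reason, rather than a bare pass/fail, lets the registration form ask the attendee for a better photo right away.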

Is It Fast Enough?

“Okay, so now we’ve registered and we’re showing up on site. Just recapping for everyone out there: we’ve got a bunch of different lines, and it doesn’t matter which one you pick because they’re all the same. So I walk up to a kiosk and it checks my face against these 12,000 other people. How long does that take?”, asks Brandt.

The answer couldn’t be more satisfying: “It takes milliseconds. It can take a blink of an eye”, says Panos. “What we usually do to minimize the chances of false positives is take multiple frames into consideration and make sure they all give the same match. When we have enough of them, we say, ‘That’s the right person.’ But in most cases it takes much less than a second”.
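The multi-frame check can be sketched as a small consensus filter in Python: each camera frame yields its own best match (or none), and a ticket is accepted only once the last few frames all agree. The class name and the three-frame window are assumptions for illustration; Panos only says they wait until enough frames agree.

```python
from __future__ import annotations

from collections import deque


class FrameConsensus:
    """Accept a match only when the last `required` frames all name the same ticket."""

    def __init__(self, required: int = 3) -> None:
        self.required = required
        self.recent: deque[str | None] = deque(maxlen=required)

    def add_frame(self, ticket: str | None) -> str | None:
        """Feed one frame's best match (None if nothing cleared the threshold).

        Returns the confirmed ticket, or None while the evidence is still insufficient.
        """
        self.recent.append(ticket)
        window_full = len(self.recent) == self.required
        if window_full and ticket is not None and all(t == ticket for t in self.recent):
            return ticket
        return None


consensus = FrameConsensus(required=3)
frames = ["T-001", "T-002", "T-001", "T-001", "T-001"]
print([consensus.add_frame(f) for f in frames])
# [None, None, None, None, 'T-001']
```

A single stray frame matched to the wrong person (T-002 above) resets the count instead of triggering a check-in. That costs a few extra frames of latency, which at typical camera frame rates is still well under a second.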

Facial Recognition For Events: How Far Can We Go?

Panos proposes a very tempting scenario: “Imagine the following: you also have a large screen or monitor somewhere in the venue, or at several different points of interest. When you stand in front of the screen, a camera attached to the monitor recognizes you and says, ‘Hey Will, your next session is there, and this is how you get to that location,’ or ‘You have this meeting.’”

Personalization

At the end of the day, success is about personalized experiences. “Personalization is what users expect. And to do it right, because this raises the stakes, you have to know who the person is, you have to know the demographics to see where they fit, and you also have to know how they’re reacting to different stimuli in real time. You want to be able to capture all of these things in real time, and this is exactly what you can get with face recognition. And here I’m going to move to our next product line, which is an analytics camera that you can just mount on a wall and that keeps track of how many people came by, how long they stayed, and how they were feeling”, says Panos.

“We can analyze how people are feeling, and we are also going to add gender and age group prediction. So imagine being an exhibitor with all these people coming to your booth, and at the end of the day you get a report that tells you which age groups and genders were the most engaged with your product, which were the least, and what you can do to improve. This is where we are going with that. Everything we know about the event will be tied to face recognition”.

The Matter of Privacy

Panos assures us that when it comes to Zenus, privacy isn’t a problem. “You cannot buy these cameras on the market. We produce and manufacture them ourselves because they have specialized hardware inside. The analytics product line has zero privacy implications. These smart sensors we have developed do all the processing on the device. They do not transmit images, they do not transmit video, they do not store images, and they do not store video. All they do is take the feed from the camera, process it, and extract statistics at an aggregate level. These are never tied to identity. Nothing. You just get aggregate statistics”, he explains.
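The on-device design amounts to reducing each camera observation to a few numbers and keeping only the totals. The Python sketch below illustrates that shape. The `Visit` record, its fields, and the mood labels are hypothetical stand-ins for whatever the sensor’s detector and emotion model produce; no image or identity ever enters the data.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass
class Visit:
    """One anonymous pass in front of the sensor (hypothetical format).

    Holds timestamps and a mood label only: no image, no face print, no identity.
    """
    arrived_s: float
    left_s: float
    mood: str  # e.g. "positive", "neutral", "negative"


def aggregate(visits: list[Visit]) -> dict[str, object]:
    """Reduce individual visits to booth-level statistics."""
    if not visits:
        return {"visitors": 0, "avg_dwell_s": 0.0, "mood_counts": {}}
    dwell_times = [v.left_s - v.arrived_s for v in visits]
    return {
        "visitors": len(visits),
        "avg_dwell_s": sum(dwell_times) / len(dwell_times),
        "mood_counts": dict(Counter(v.mood for v in visits)),
    }


visits = [Visit(0, 30, "positive"), Visit(10, 100, "neutral"), Visit(50, 80, "positive")]
report = aggregate(visits)
visits.clear()  # the per-visit records are dropped; only the report leaves the device
print(report)
# {'visitors': 3, 'avg_dwell_s': 50.0, 'mood_counts': {'positive': 2, 'neutral': 1}}
```

The exhibitor report Panos mentions would follow the same pattern, with age-group and gender labels counted alongside mood.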

So How Does It Work?

“We just get the face plus the registration number. Our servers process the picture to find the face, check its quality, extract the biometric data, and so forth, and then the picture is immediately discarded. The images are never, ever stored on our system. It’s impossible. What we keep is a very, very small face print from each picture, about 100 numbers, plus the registration ticket number.

“So when we go on site on the day of the event and try to recognize someone, our system returns the ticket number. Then the company doing the check-in on site says, ‘Okay, this ticket number corresponds to this person,’ and checks that person in. This way you have a very clear separation between the registration companies that collect the information and Zenus, which does the biometric processing. It’s a very, very clear separation. We never get names, email addresses, or any information like that. We don’t store images. We just have the ticket number plus the face print. And when the event is over, our system automatically nukes the entire database”, he concludes.
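This separation can be sketched as two stores that share nothing but the ticket number. In the minimal Python sketch below, which is an illustration and not Zenus’s code, the biometric side keeps only ticket-to-face-print pairs plus a purge method for the end of the event, while names live solely in the registration company’s records. The class name, the toy 3-number prints, the Euclidean distance, and the 0.1 cut-off are all assumptions.

```python
from __future__ import annotations

import math


class FacePrintStore:
    """Biometric side (hypothetical): ticket number -> face print, and nothing else."""

    def __init__(self) -> None:
        self._prints: dict[str, list[float]] = {}

    def enroll(self, ticket: str, face_print: list[float]) -> None:
        # Only the extracted numbers are kept; the photo is assumed discarded upstream.
        self._prints[ticket] = face_print

    def identify(self, probe: list[float], max_distance: float) -> str | None:
        """Return the ticket whose print is closest to the probe, if close enough."""
        best = min(self._prints.items(), key=lambda item: math.dist(probe, item[1]), default=None)
        if best is None or math.dist(probe, best[1]) > max_distance:
            return None
        return best[0]

    def purge(self) -> None:
        """Run when the event is over: delete every stored face print."""
        self._prints.clear()


# Registration company's side (hypothetical): the only place names are stored.
registrations = {"T-1001": "George", "T-1002": "Maria"}

store = FacePrintStore()
store.enroll("T-1001", [0.10, 0.20, 0.30])
store.enroll("T-1002", [0.90, 0.10, 0.40])

ticket = store.identify([0.11, 0.19, 0.30], max_distance=0.1)  # biometric side returns only a ticket
print(ticket, "->", registrations[ticket])                      # check-in side maps it to a person

store.purge()
print(store.identify([0.11, 0.19, 0.30], max_distance=0.1))     # None: nothing left to match
```

Because the biometric store never holds a name, a leak of it alone reveals only ticket numbers and vectors, and the purge leaves nothing behind after the event.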

Where Is The Data?

“Most of the events and deployments we support run everything in the cloud, and there are very, very good reasons for that. It’s much, much easier to control security; with local hardware, somebody could take a device and run. It’s also much, much more scalable, because the models we use for these calculations are very complex, and existing laptops and cell phones are not powerful enough. Getting this level of accuracy and speed at scale, when you have a large database of people and not just ten people or one person, is very, very difficult. That’s why in most cases the cloud is required. Another benefit of that scalability is that you can use any device without having to worry. And the other thing we’re doing is that we have regional servers”, says Panos.

“If you are doing an event in Europe, the data will be sent to and processed on servers in Europe. If you have an event in the U.S., it’ll be in the U.S. We have this kind of mechanism to make people feel even more safe about the way their data is handled. On some occasions, we do have event organizers who say, ‘No, I absolutely want local processing.’ In those cases, and we have done this in the past, we can build a server in our office and ship it to the venue, and all the processing happens on that local server”.
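Region pinning with an on-site override is simple to express as configuration. The Python sketch below is one way to model it; the function name, the region codes, and the endpoint URLs (under the reserved `.invalid` domain) are placeholders, since the episode does not name Zenus’s actual servers.

```python
from __future__ import annotations

# Placeholder endpoints: the real hostnames are not given in the episode.
REGIONAL_ENDPOINTS = {
    "EU": "https://eu.facematch.invalid",
    "US": "https://us.facematch.invalid",
}


def processing_endpoint(event_region: str, local_server: str | None = None) -> str:
    """Pick where face data is sent for an event.

    An on-site server, when the organizer requires local processing, takes priority;
    otherwise the data stays in the event's own region.
    """
    if local_server is not None:
        return local_server
    try:
        return REGIONAL_ENDPOINTS[event_region]
    except KeyError:
        # Refuse rather than silently routing data to another region.
        raise ValueError(f"no processing region configured for {event_region!r}") from None
```

Failing loudly on an unknown region is a deliberate choice in this sketch: falling back to a default server would quietly defeat the point of keeping data in-region.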

What Do I Need, And How Much?

Nothing comes for free, and nowadays nothing happens without the Internet. So when it comes to facial recognition, what exactly do you need, and how much of it? Panos lays it out: “Each terminal requires less than 1 Mbps of upload bandwidth. In most venues, the registration lines have at least 10 to 15 Mbps of upload. To give you another example, Starbucks internet is good enough in most cases. That’s how little bandwidth we require. Our user interfaces also come with a section that lets you update the settings very quickly, so if the internet is slower than expected, you can adjust them and still have a very, very good and fast experience. We can anticipate all of this”.
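Those figures make capacity planning a one-line calculation. The sketch below treats the “less than 1 Mbps” per terminal as a conservative 1 Mbps and reserves some upload for other traffic; the function name and the 2 Mbps reserve are assumptions, not figures from the episode.

```python
import math


def max_terminals(venue_upload_mbps: float, per_terminal_mbps: float = 1.0, reserved_mbps: float = 2.0) -> int:
    """How many check-in terminals fit in the venue's upload bandwidth.

    per_terminal_mbps: upper bound per terminal (the episode quotes under 1 Mbps).
    reserved_mbps: upload kept free for everything else on the network (assumed).
    """
    usable = max(venue_upload_mbps - reserved_mbps, 0.0)
    return math.floor(usable / per_terminal_mbps)


for upload in (10, 15):
    print(f"{upload} Mbps upload -> up to {max_terminals(upload)} terminals")
```

With the 10 to 15 Mbps Panos cites for typical registration areas, this leaves room for roughly 8 to 13 terminals even after the assumed reserve.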

What about money? “For the registration piece, especially when you go through a partner that has already done the integration work, it is in most cases just 49 cents per attendee. If you have an event with 1,000 attendees, that’s 500 bucks. And that’s it”.
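The pricing is easy to budget for. The sketch below uses the 49-cent figure quoted above, which Panos says applies in most cases when going through an integration partner (actual pricing may differ), and works in whole cents to avoid floating-point rounding. The function name is hypothetical.

```python
def registration_cost_usd(attendees: int, cents_per_attendee: int = 49) -> float:
    """Total facial-recognition check-in cost in dollars, computed from whole cents."""
    if attendees < 0:
        raise ValueError("attendees cannot be negative")
    return attendees * cents_per_attendee / 100


for n in (1_000, 5_000, 12_000):
    print(f"{n:>6} attendees: ${registration_cost_usd(n):,.2f}")
```

For the 1,000-attendee example this gives exactly $490, which Panos rounds to 500 bucks.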

Conclusion

And that’s all for this week’s Event Tech Podcast! A true, hardcore lesson on facial recognition for events. We hope you’re now ready to give it a try at your own event, and please let us know what you think. Did the experience run smoothly? Would you be willing to do it again? Is facial recognition for events worth the investment? We’d love your input!